Motion Compensation in Radiotherapy

Project Description

In radiosurgery, accurate targeting of tumors anywhere in the body has been possible for several years. Current clinical applications deliver a lethal dose of radiation to the cancerous region with an accuracy of about 2-3 mm. Nevertheless, tumor motion (induced by breathing, heartbeat or shifting of the patient) has to be compensated to allow precise irradiation. Conventional approaches rely on gating techniques, which irradiate the tumor only at specific phases of respiration, or on enlarging the target volume until the complete tumor movement is covered.

An approach developed in a collaboration between Prof. Schweikard and Accuray Inc., Sunnyvale, CA, addresses this problem by tracking the motion of the patient's chest or abdomen with stereoscopic infrared camera systems. A mathematical model, the correlation model, is computed from the information provided by these external surrogates and allows the actual tumor movement to be inferred. A robot-based radiotherapy system, such as the CyberKnife system, can use this information to compensate for patient and respiratory movement in real time.

Areas of Research:

Prediction of Breathing Motion:

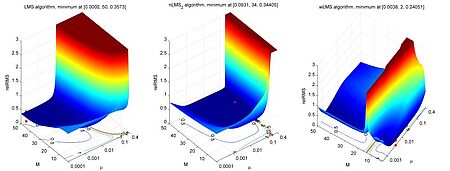

A new problem arising from this approach is that neither the recording of the patient's position nor the repositioning of the robotic system is instantaneous. Currently employed systems exhibit delays of approximately 65 to 300 ms, which result in targeting errors of up to several millimeters. This systematic error can be reduced by time series prediction of the external surrogates. Besides classical regression approaches, such as the least mean squares algorithm, current research focuses on machine learning approaches based on kernel methods and statistical learning. The continuous improvement of these algorithms is one main topic of this research project.
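To illustrate the idea of predicting the surrogate signal across the system latency, here is a minimal sketch of a normalized least mean squares (nLMS) predictor. It is not the project's implementation: the function name `nlms_predict`, the filter order, the step size `mu`, the assumed 25 Hz sampling rate and the purely sinusoidal "breathing" signal are all illustrative assumptions.

```python
import math

def nlms_predict(signal, horizon, order=4, mu=0.5, eps=1e-8):
    """Predict each sample `horizon` steps ahead with a normalized LMS filter.

    pred[t] is the estimate of signal[t] that was made at time t - horizon
    (None where no estimate is available yet).
    """
    weights = [0.0] * order
    pred = [None] * len(signal)
    for t in range(order - 1, len(signal)):
        # Learn from the newest pair whose target is already known:
        # the window that ended `horizon` samples ago and the current sample.
        t_old = t - horizon
        if t_old >= order - 1:
            x_old = signal[t_old - order + 1 : t_old + 1]
            err = signal[t] - sum(w * x for w, x in zip(weights, x_old))
            norm = eps + sum(x * x for x in x_old)
            weights = [w + mu * err * x / norm for w, x in zip(weights, x_old)]
        # Predict `horizon` samples ahead from the current window.
        if t + horizon < len(signal):
            x_now = signal[t - order + 1 : t + 1]
            pred[t + horizon] = sum(w * x for w, x in zip(weights, x_now))
    return pred

def rmse(pairs):
    pairs = list(pairs)
    return math.sqrt(sum((a - b) ** 2 for a, b in pairs) / len(pairs))

# Synthetic stand-in for a breathing signal (assumption): 0.25 Hz sinusoid.
fs = 25.0      # assumed sampling rate in Hz
horizon = 4    # 4 samples at 25 Hz = 160 ms, inside the 65-300 ms range
signal = [math.sin(2 * math.pi * 0.25 * k / fs) for k in range(1500)]
pred = nlms_predict(signal, horizon)

# Compare against doing nothing, i.e. acting on the delayed sample.
half = len(signal) // 2  # skip the initial adaptation phase
rmse_delayed = rmse((signal[t], signal[t - horizon]) for t in range(half, len(signal)))
rmse_predicted = rmse((signal[t], pred[t]) for t in range(half, len(signal)))
```

On this idealized signal the predictor should end up well below the error of the uncompensated, delayed signal. Real breathing is irregular, which is why the text points to kernel methods and statistical learning as alternatives.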

Camera setup to capture respiratory motion

In our lab, we also measure actual human respiration. To do so, 20 infrared LEDs were attached to the chest of a test person. These LEDs were then tracked using a high-speed IR tracking system (atracsys accuTrack compact). The camera was mounted on a robotic arm so that it could be positioned accurately and held stable. A short sequence of the recorded respiratory motion can be seen in the following movie.


Detection of Tumor Motion (Correlation Models):

Once the motion of the patient's chest is known, the position of the tumor is inferred from it. This is done with a correlation model that captures the relation between surface motion and target motion. How this model is constructed and validated is also a matter of ongoing research.
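As a rough illustration of what a correlation model does, the following sketch fits the simplest conceivable form, a straight line obtained by least squares, to paired samples of surface position and target position. The linear form, the function names and the numbers are assumptions made for this example only; the models studied in the project may look quite different.

```python
def fit_linear_correlation(external, internal):
    """Least-squares line internal ~ slope * external + intercept."""
    n = len(external)
    mean_e = sum(external) / n
    mean_i = sum(internal) / n
    var_e = sum((e - mean_e) ** 2 for e in external)
    cov = sum((e - mean_e) * (i - mean_i) for e, i in zip(external, internal))
    slope = cov / var_e
    intercept = mean_i - slope * mean_e
    return slope, intercept

def estimate_target(model, external_value):
    """Map a new surface measurement to an estimated target position."""
    slope, intercept = model
    return slope * external_value + intercept

# Hypothetical training pairs in mm: surface marker position and the
# target position observed at the same instants.
external_train = [0.0, 2.0, 4.0, 6.0, 8.0]
internal_train = [1.0, 4.0, 7.0, 10.0, 13.0]

model = fit_linear_correlation(external_train, internal_train)
estimate = estimate_target(model, 5.0)
```

Once fitted, the model can be evaluated on every new surface measurement, so the target position is available at the rate of the optical tracking rather than only at the instants where the target itself was observed.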

Multivariate Motion Compensation:

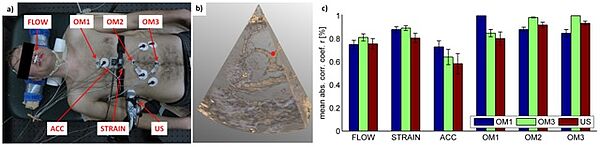

Current clinical practice is to use three optical infrared markers, which can be placed at any position on the chest or abdomen of the patient. As several studies have indicated, the correlation accuracy depends significantly on the marker placement and on the breathing characteristics of the patient. We investigate how this dependency can be reduced using multivariate measurement setups, e.g. acceleration, strain, air flow and surface electromyography (EMG). The aim of this research is the development of multi-modal prediction and correlation models. Special focus is placed on real-time feature detection algorithms that identify the most relevant and least redundant sensors in order to increase the robustness of the complete system.

a) Sensor setup of a multivariate measurement with flow sensor (FLOW), optical markers 1-3 (OM 1-3), acceleration sensor (ACC), strain sensor (STRAIN) and ultrasound transducer (US).

b) Example of an ultrasound image and the selected target area (red dot) in the liver.

c) Mean absolute correlation coefficients and standard deviation of all external sensors with respect to OM1, OM3 and US.
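The notion of "most relevant and least redundant" sensors can be made concrete with a small greedy selection sketch. The criterion is an assumption for illustration: relevance is the absolute Pearson correlation with the target, and redundancy is the mean absolute correlation with the sensors already chosen. The selection runs offline on synthetic signals and is not the real-time algorithm investigated in the project. The sensor names follow the figure labels, but the data are made up.

```python
import math

def pearson(a, b):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def select_sensors(sensors, target, k):
    """Greedily pick k sensors, trading relevance against redundancy."""
    selected = []
    remaining = list(sensors)
    while remaining and len(selected) < k:
        def score(name):
            relevance = abs(pearson(sensors[name], target))
            if not selected:
                return relevance
            redundancy = sum(
                abs(pearson(sensors[name], sensors[s])) for s in selected
            ) / len(selected)
            return relevance - redundancy
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected

# Synthetic signals over four full cycles (assumption, not measured data).
steps = range(200)
base = [math.sin(2 * math.pi * k / 50) for k in steps]
quad = [math.cos(2 * math.pi * k / 50) for k in steps]
ripple = [0.1 * math.sin(2 * math.pi * 3 * k / 50) for k in steps]

target = [a + 0.5 * b for a, b in zip(base, quad)]
sensors = {
    "OM1": base,                                    # relevant
    "OM2": [a + c for a, c in zip(base, ripple)],   # nearly a copy of OM1
    "FLOW": quad,                                   # less relevant, but new information
}
chosen = select_sensors(sensors, target, k=2)
```

In this constructed case the second marker is skipped in favor of the flow signal, because it adds almost nothing beyond the first marker even though it correlates well with the target on its own.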

Probabilistic Motion Compensation:

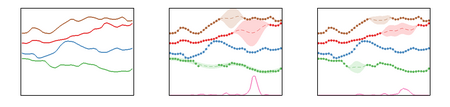

So far, surrogate-based motion compensation has required both a prediction model and a correlation model. The two models are used in sequence, meaning that the output of the first model is used as the input to the second (the order is arbitrary). Consequently, errors of the first model propagate into the result of the second. In this context, Multi-Task Gaussian Process (MTGP) models have been investigated. These models make it possible, for the first time, to solve both problems efficiently within a single model. Studies have shown that this leads to a reduction of the total error. MTGP models are an extension of Gaussian process models, which are frequently used for regression tasks in machine learning. The essential advantage of MTGPs is that multiple signals acquired at different sampling frequencies (or even at discrete time points) can be modelled simultaneously. Prediction accuracy increases because the correlation between the signals is learned automatically.
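The following sketch shows, under strong simplifying assumptions, how a single multi-task Gaussian process can combine a densely sampled surrogate with a sparsely sampled target. It uses a squared-exponential time kernel multiplied by a fixed 2x2 task covariance matrix. In a real MTGP the task covariance and kernel hyperparameters are learned from data, whereas here they are set by hand. The code does not use the MTGP toolbox, and all names and values are hypothetical.

```python
import math

def se_kernel(t1, t2, length=1.0):
    """Squared-exponential covariance between two time points."""
    return math.exp(-0.5 * ((t1 - t2) / length) ** 2)

def solve(matrix, rhs):
    """Solve matrix * x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    m = [row[:] + [r] for row, r in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x

def mtgp_mean(obs, query_time, query_task, task_cov, noise=1e-2):
    """Posterior mean at (query_time, query_task).

    obs is a list of (time, task_index, value) triples; the covariance of two
    observations is task_cov[i][j] * se_kernel(t, t'), plus noise on the diagonal.
    """
    k_train = [
        [task_cov[i][j] * se_kernel(ti, tj) + (noise if a == b else 0.0)
         for b, (tj, j, _) in enumerate(obs)]
        for a, (ti, i, _) in enumerate(obs)
    ]
    alpha = solve(k_train, [y for _, _, y in obs])
    k_star = [task_cov[query_task][j] * se_kernel(query_time, tj)
              for tj, j, _ in obs]
    return sum(k * a for k, a in zip(k_star, alpha))

# Task 0: surrogate, sampled every 0.5 s. Task 1: target, sampled every 2 s.
surrogate = [(0.5 * k, 0, math.sin(0.5 * k)) for k in range(13)]
target = [(t, 1, math.sin(t)) for t in (0.0, 2.0, 4.0)]
task_cov = [[1.0, 0.9], [0.9, 1.0]]  # hand-set, not learned (assumption)

t_query = 5.5  # later than the last target sample
truth = math.sin(t_query)
joint = mtgp_mean(surrogate + target, t_query, 1, task_cov)
single = mtgp_mean(target, t_query, 1, task_cov)
```

Here `joint` uses both signals, while `single` sees only the sparse target samples. Because the surrogate is still being observed at the query time, the joint model can follow the target where the single-task model falls back towards its prior mean.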

MTGP Toolbox

The MTGP framework is very flexible and can be used for various biomedical problems, for instance the analysis of vital-sign data of intensive care unit patients. A Matlab toolbox was developed in cooperation with the Computational Health Informatics Lab (University of Oxford). A detailed description of the toolbox and several illustrative examples can be found here. [Link Toolbox]

Publications

2002

"Fiducial-Less Compensation of Breathing Motion in Lung Cancer Radiosurgery" 2002.

2000

Robotic Motion Compensation for Respiratory Movement during Radiosurgery, Journal of Computer-Aided Surgery, vol. 5, no. 4, pp. 263-277, 2000. Wiley-Liss.

DOI: 10.3109/10929080009148894

1998

Image-Guided Stereotactic Radiosurgery: The Cyberknife, Barnett, G., Roberts, D., Guthrie, B. (ed.), McGraw Hill, 1998.

1995

Future health: computers and medicine in the twenty-first century, .... New York, NY, USA: St. Martin's Press, Inc., 1995.

ISBN: 0-312-12602-6


Floris Ernst

Building 64, Room 97

floris.ernst(at)uni-luebeck.de

+49 451 31015200

Ralf Bruder

Building 64, Room 92

ralf.bruder(at)uni-luebeck.de

+49 451 31015205